Litmus is an open-source tool for running chaos experiments on Kubernetes clusters. It introduces unexpected failures into a system to test its resiliency. With Litmus, you can create chaos experiments, find bugs quickly, and fix them before they ever reach production, which makes it a great way to make a Kubernetes cluster more resilient. In this tutorial I will walk you through installing Litmus on a Kubernetes cluster, then creating and running the following experiments on it:

- Pod Deletion

- Pod Autoscaler

Before we get into setup and what Litmus can do, be sure to check out our video guide:

Chaos Engineering for Kubernetes with Litmus

We look at chaos engineering for Kubernetes using Litmus, and how it can help you find bugs and vulnerabilities in your production clusters so you can apply fixes before they become a problem.

Prerequisites

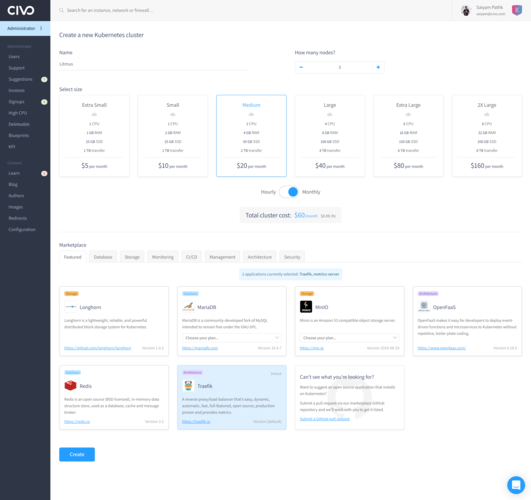

- A Kubernetes cluster you control. We'll take advantage of Civo's super-fast managed k3s service to experiment with this quickly.

- kubectl installed, and the kubeconfig file for your cluster downloaded.

Getting up and running with Litmus

Once you have the Kubernetes cluster ready, install the LitmusChaos Operator:

```
$ kubectl apply -f https://litmuschaos.github.io/litmus/litmus-operator-v1.9.0.yaml
namespace/litmus created
serviceaccount/litmus created
clusterrole.rbac.authorization.k8s.io/litmus created
clusterrolebinding.rbac.authorization.k8s.io/litmus created
deployment.apps/chaos-operator-ce created
customresourcedefinition.apiextensions.k8s.io/chaosengines.litmuschaos.io created
customresourcedefinition.apiextensions.k8s.io/chaosexperiments.litmuschaos.io created
customresourcedefinition.apiextensions.k8s.io/chaosresults.litmuschaos.io created
```

This installs the required Custom Resource Definitions (CRDs) and the Operator. You should see the Litmus operator running in its own namespace:

```
$ kubectl get pods -n litmus
NAME                                 READY   STATUS    RESTARTS   AGE
chaos-operator-ce-56449c7d75-lt8jc   1/1     Running   0          90s

$ kubectl get crds | grep chaos
chaosengines.litmuschaos.io       2020-11-06T14:23:59Z
chaosexperiments.litmuschaos.io   2020-11-06T14:24:00Z
chaosresults.litmuschaos.io       2020-11-06T14:24:00Z

$ kubectl api-resources | grep chaos
chaosengines       litmuschaos.io   true   ChaosEngine
chaosexperiments   litmuschaos.io   true   ChaosExperiment
chaosresults       litmuschaos.io   true   ChaosResult
```

Below are the three CRDs (definitions taken from the official repository):

- ChaosEngine: A resource to link a Kubernetes application or Kubernetes node to a ChaosExperiment. ChaosEngine is watched by Litmus' Chaos-Operator, which then invokes Chaos-Experiments.

- ChaosExperiment: A resource to group the configuration parameters of a chaos experiment. ChaosExperiment CRs are created by the operator when experiments are invoked by ChaosEngine.

- ChaosResult: A resource to hold the results of a chaos-experiment. The Chaos-exporter reads the results and exports the metrics into a configured Prometheus server.
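To make the link between these resources concrete, here is a small Python sketch. A ChaosEngine names the experiments it runs in spec.experiments, and each run leaves behind a ChaosResult. The sketch assumes the `<engine-name>-<experiment-name>` naming that this tutorial relies on later (for example, nginx-chaos-pod-delete). The helper expected_result_names is hypothetical and is not part of Litmus.

```python
# Hypothetical helper (not part of Litmus): list the ChaosResult names a
# ChaosEngine is expected to produce, ASSUMING the "<engine>-<experiment>"
# naming seen in this tutorial (e.g. nginx-chaos-pod-delete).
from typing import Any, Dict, List


def expected_result_names(engine: Dict[str, Any]) -> List[str]:
    engine_name = engine["metadata"]["name"]
    # spec.experiments refers to installed ChaosExperiment resources by name.
    experiments = engine.get("spec", {}).get("experiments", [])
    return [f"{engine_name}-{exp['name']}" for exp in experiments]


engine = {
    "apiVersion": "litmuschaos.io/v1alpha1",
    "kind": "ChaosEngine",
    "metadata": {"name": "nginx-chaos", "namespace": "demo"},
    "spec": {"experiments": [{"name": "pod-delete"}, {"name": "pod-autoscaler"}]},
}
print(expected_result_names(engine))
# ['nginx-chaos-pod-delete', 'nginx-chaos-pod-autoscaler']
```

The resulting names are the ones you would pass to kubectl describe chaosresult.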

Now it's time to create some chaos experiments!

Step 1 - Prepare your cluster

Create a file named sa.yaml with the contents below. It defines a new namespace, demo, together with a ServiceAccount, Role and RoleBinding for the chaos engine to use:

```yaml
---
apiVersion: v1
kind: Namespace
metadata:
  name: demo
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: chaos-sa
  namespace: demo
  labels:
    name: pod-delete-sa
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: chaos-sa
  namespace: demo
  labels:
    name: chaos-sa
rules:
- apiGroups: ["","litmuschaos.io","batch","apps"]
  resources: ["pods","deployments","pods/log","events","jobs","chaosengines","chaosexperiments","chaosresults"]
  verbs: ["create","list","get","patch","update","delete","deletecollection"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: chaos-sa
  namespace: demo
  labels:
    name: pod-delete-sa
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: chaos-sa
subjects:
- kind: ServiceAccount
  name: chaos-sa
  namespace: demo
```

Apply this to your cluster:

```
$ kubectl apply -f sa.yaml
namespace/demo created
serviceaccount/chaos-sa created
role.rbac.authorization.k8s.io/chaos-sa created
rolebinding.rbac.authorization.k8s.io/chaos-sa created
```

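As the output shows, this created the demo namespace, the chaos-sa ServiceAccount, and a Role and RoleBinding. Together they let the ServiceAccount manage the Litmus custom resources, as well as pods, jobs and deployments, within the demo namespace.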
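The following Python sketch shows what a Role rule like the one in sa.yaml permits. It is a simplified model of Kubernetes RBAC matching. A request is allowed if some rule lists the request's API group, resource and verb, or the `*` wildcard. It deliberately ignores resourceNames, non-resource URLs and other details of the real authorizer. The names is_allowed and CHAOS_SA_RULES are hypothetical.

```python
# Simplified, illustrative model of Kubernetes RBAC rule matching: a request
# is allowed if ANY rule lists its API group, resource and verb (or "*").
# Real RBAC also handles resourceNames, non-resource URLs, etc. (ignored here).
from typing import Dict, List

Rule = Dict[str, List[str]]

# The single rule from the chaos-sa Role in sa.yaml.
CHAOS_SA_RULES: List[Rule] = [
    {
        "apiGroups": ["", "litmuschaos.io", "batch", "apps"],
        "resources": ["pods", "deployments", "pods/log", "events", "jobs",
                      "chaosengines", "chaosexperiments", "chaosresults"],
        "verbs": ["create", "list", "get", "patch", "update", "delete",
                  "deletecollection"],
    }
]


def _matches(values: List[str], wanted: str) -> bool:
    return "*" in values or wanted in values


def is_allowed(rules: List[Rule], api_group: str, resource: str, verb: str) -> bool:
    """Return True if any rule grants `verb` on `resource` in `api_group`."""
    return any(
        _matches(r["apiGroups"], api_group)
        and _matches(r["resources"], resource)
        and _matches(r["verbs"], verb)
        for r in rules
    )


print(is_allowed(CHAOS_SA_RULES, "", "pods", "delete"))            # True
print(is_allowed(CHAOS_SA_RULES, "apps", "deployments", "patch"))  # True
print(is_allowed(CHAOS_SA_RULES, "", "nodes", "get"))              # False
print(is_allowed(CHAOS_SA_RULES, "", "pods", "watch"))             # False
```

Every API group in a rule is combined with every resource and verb in it. As a result, this single rule also nominally covers pairs that do not exist, such as pods in the apps group. That is harmless, but worth keeping in mind when you read or tighten the Role.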

Step 2 - Install experiments

Chaos experiments contain the details of the chaos events to be triggered. Ready-made experiments are listed on ChaosHub and can be installed directly onto the cluster. For now, we will install the generic experiments, which include the pod-delete and pod-autoscaler experiments we need:

```
$ kubectl apply -f "https://hub.litmuschaos.io/api/chaos/1.9.0?file=charts/generic/experiments.yaml" -n demo
```

This creates both of the experiments mentioned above, along with several others:

```
$ kubectl get chaosexperiments -n demo
NAME                      AGE
pod-delete                10m
pod-network-duplication   19s
node-drain                19s
node-io-stress            18s
disk-fill                 17s
node-taint                17s
pod-autoscaler            16s
pod-cpu-hog               16s
pod-memory-hog            15s
pod-network-corruption    14s
pod-network-loss          13s
disk-loss                 13s
pod-io-stress             12s
k8-service-kill           11s
pod-network-latency       11s
node-cpu-hog              10s
docker-service-kill       10s
kubelet-service-kill      9s
node-memory-hog           8s
k8-pod-delete             8s
container-kill            7s
```
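Before moving on, you may want to confirm that the two experiments this tutorial needs were installed. The Python sketch below assumes kubectl's default table output: a header row, then one experiment per row with its name in the first column. The helper names are hypothetical.

```python
# Hypothetical check: confirm required experiments appear in the output of
# `kubectl get chaosexperiments -n demo` (default table format assumed:
# header row, then one experiment name per row in the first column).
from typing import Iterable, List

REQUIRED = ("pod-delete", "pod-autoscaler")


def experiment_names(table: str) -> List[str]:
    """Extract the NAME column from kubectl's tabular output."""
    rows = [line.split() for line in table.strip().splitlines()]
    return [row[0] for row in rows[1:] if row]  # skip the header row


def missing_experiments(table: str, required: Iterable[str] = REQUIRED) -> List[str]:
    names = set(experiment_names(table))
    return [name for name in required if name not in names]


sample = """\
NAME             AGE
pod-delete       10m
pod-autoscaler   16s
pod-cpu-hog      16s
"""
print(missing_experiments(sample))                                # []
print(missing_experiments(sample, ["pod-delete", "node-drain"]))  # ['node-drain']
```

For a quick manual check, you can also ask kubectl for the experiments by name with kubectl get chaosexperiments pod-delete pod-autoscaler -n demo. It reports a NotFound error for any experiment that is missing.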

Step 3 - Create Deployment and Chaos Engine for pod-delete

Let's start a simple 2-replica nginx deployment in our demo namespace that we can run our experiments on.

```
$ kubectl create deployment nginx --image=nginx --replicas=2 --namespace=demo
deployment.apps/nginx created
```

Next, create a file named pod_delete.yaml that defines a ChaosEngine to apply to our cluster:

```yaml
apiVersion: litmuschaos.io/v1alpha1
kind: ChaosEngine
metadata:
  name: nginx-chaos
  namespace: demo
spec:
  appinfo:
    appns: 'demo'
    applabel: 'app=nginx'
    appkind: 'deployment'
  annotationCheck: 'false'
  engineState: 'active'
  auxiliaryAppInfo: ''
  chaosServiceAccount: chaos-sa
  monitoring: false
  jobCleanUpPolicy: 'delete'
  experiments:
    - name: pod-delete
      spec:
        components:
          env:
            - name: TOTAL_CHAOS_DURATION
              value: '30'
            - name: CHAOS_INTERVAL
              value: '10'
            - name: FORCE
              value: 'false'
```

Apply this to our cluster:

```
$ kubectl apply -f pod_delete.yaml
chaosengine.litmuschaos.io/nginx-chaos created
```

If you set annotationCheck to 'true' in the engine above, you also need to annotate your deployment for the experiment to target it:

```
$ kubectl annotate deploy/nginx litmuschaos.io/chaos="true" -n demo
```
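ChaosEngine manifests are deeply nested, so a misplaced indent is easy to introduce. One option is to generate them programmatically. The Python sketch below builds the same pod-delete engine as a dict and prints it as JSON. kubectl accepts JSON manifests as well as YAML, so the output could be piped into kubectl apply -f -. The function build_pod_delete_engine and its parameters are hypothetical, and the defaults simply mirror pod_delete.yaml.

```python
# Hypothetical generator for the pod-delete ChaosEngine used in Step 3.
# kubectl accepts JSON as well as YAML, so the output can be piped to
# `kubectl apply -f -`.
import json
from typing import Any, Dict


def build_pod_delete_engine(
    name: str = "nginx-chaos",
    namespace: str = "demo",
    app_label: str = "app=nginx",
    total_chaos_duration: int = 30,
    chaos_interval: int = 10,
    force: bool = False,
    annotation_check: bool = False,
) -> Dict[str, Any]:
    # Kubernetes env values must be strings, and this tutorial's YAML also
    # quotes annotationCheck, so values are stringified ('30', 'false', ...).
    env = [
        {"name": "TOTAL_CHAOS_DURATION", "value": str(total_chaos_duration)},
        {"name": "CHAOS_INTERVAL", "value": str(chaos_interval)},
        {"name": "FORCE", "value": str(force).lower()},
    ]
    return {
        "apiVersion": "litmuschaos.io/v1alpha1",
        "kind": "ChaosEngine",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "appinfo": {"appns": namespace, "applabel": app_label, "appkind": "deployment"},
            "annotationCheck": str(annotation_check).lower(),
            "engineState": "active",
            "auxiliaryAppInfo": "",
            "chaosServiceAccount": "chaos-sa",
            "monitoring": False,
            "jobCleanUpPolicy": "delete",
            "experiments": [
                {"name": "pod-delete", "spec": {"components": {"env": env}}}
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_pod_delete_engine(), indent=2))
```

If you call it with annotation_check=True, remember that the kubectl annotate step above is still required.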

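Once the ChaosEngine is created, two new pods appear in the demo namespace: the chaos runner (nginx-chaos-runner) and the experiment pod (here, pod-delete-up8kop-zmgjx). The experiment pod then starts terminating pods from our nginx deployment, which is the point of this experiment.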

```
$ kubectl get pods -n demo
NAME                      READY   STATUS        RESTARTS   AGE
nginx-f89759699-kxrbc     1/1     Running       0          83s
nginx-chaos-runner        1/1     Running       0          25s
pod-delete-up8kop-zmgjx   1/1     Running       0          11s
nginx-f89759699-p7cwq     0/1     Terminating   0          83s
nginx-f89759699-j2swb     1/1     Running       0          5s
```
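In the listing above, one nginx pod is Terminating while a replacement (5 seconds old) is already Running. This is the deployment's ReplicaSet recreating the deleted pod. The Python sketch below tallies pod statuses for one deployment from kubectl get pods output. It relies on deployment pods being named `<deployment>-<pod-template-hash>-<suffix>`, so a prefix such as nginx-f89759699- selects them and skips the chaos pods. The helper status_counts is hypothetical.

```python
# Hypothetical helper: tally pod STATUS values for one Deployment from the
# plain-text output of `kubectl get pods -n demo`. Deployment pods are named
# "<deployment>-<pod-template-hash>-<suffix>", so a name prefix such as
# "nginx-f89759699-" selects them and skips the chaos runner/experiment pods.
from collections import Counter


def status_counts(table: str, name_prefix: str) -> Counter:
    counts: Counter = Counter()
    for line in table.strip().splitlines()[1:]:  # skip the header row
        fields = line.split()
        # Columns: NAME READY STATUS RESTARTS AGE
        if len(fields) >= 3 and fields[0].startswith(name_prefix):
            counts[fields[2]] += 1
    return counts


listing = """\
NAME                      READY   STATUS        RESTARTS   AGE
nginx-f89759699-kxrbc     1/1     Running       0          83s
nginx-chaos-runner        1/1     Running       0          25s
pod-delete-up8kop-zmgjx   1/1     Running       0          11s
nginx-f89759699-p7cwq     0/1     Terminating   0          83s
nginx-f89759699-j2swb     1/1     Running       0          5s
"""
print(status_counts(listing, "nginx-f89759699-"))
# Counter({'Running': 2, 'Terminating': 1})
```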

To check the status and result of the experiment, describe the ChaosResult:

```
$ kubectl describe chaosresult nginx-chaos-pod-delete -n demo
```

In the output, the Status section reports the experiment's phase (for example, Completed) and its verdict (for example, Pass or Fail).
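To check the verdict from a script rather than by eye, you could fetch the ChaosResult as JSON and read the verdict field. This Python sketch rests on one assumption: in Litmus 1.x the verdict sits at status.experimentstatus.verdict. It also tries a camelCase experimentStatus key in case your version differs, so check the field path against your own cluster's output. The helper verdict and the filename verdict.py are hypothetical.

```python
# Hypothetical helper: read the verdict from a ChaosResult saved with
#   kubectl get chaosresult nginx-chaos-pod-delete -n demo -o json > result.json
# ASSUMPTION: the verdict lives at status.experimentstatus.verdict (Litmus 1.x);
# a camelCase "experimentStatus" key is also tried in case your version uses it.
import json
import sys
from typing import Any, Dict, Optional


def verdict(chaos_result: Dict[str, Any]) -> Optional[str]:
    status = chaos_result.get("status", {})
    exp_status = status.get("experimentstatus") or status.get("experimentStatus") or {}
    return exp_status.get("verdict")


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1]) as fh:
        print(verdict(json.load(fh)) or "verdict not found")
```

Usage, assuming the file is saved as verdict.py: python3 verdict.py result.json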

Step 4 - Create the Chaos Engine for pod-autoscaler

As our nginx deployment already exists, we only need to create the ChaosEngine:

```yaml
apiVersion: litmuschaos.io/v1alpha1
kind: ChaosEngine
metadata:
  name: nginx-chaos
  namespace: demo
spec:
  # It can be true/false
  annotationCheck: 'false'
  # It can be active/stop
  engineState: 'active'
  # ex. values: ns1:name=percona,ns2:run=nginx
  auxiliaryAppInfo: ''
  appinfo:
    appns: 'demo'
    applabel: 'app=nginx'
    appkind: 'deployment'
  chaosServiceAccount: chaos-sa
  monitoring: false
  # It can be delete/retain
  jobCleanUpPolicy: 'delete'
  experiments:
    - name: pod-autoscaler
      spec:
        components:
          env:
            # set chaos duration (in sec) as desired
            - name: TOTAL_CHAOS_DURATION
              value: '60'
            # number of replicas to scale
            - name: REPLICA_COUNT
              value: '10'
```

Apply this YAML file to your cluster. Because it reuses the name nginx-chaos, kubectl updates the existing engine and reports chaosengine.litmuschaos.io/nginx-chaos configured.
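A misspelled ChaosEngine key, such as jobCleandUpPolicy instead of jobCleanUpPolicy, is easy to miss. Depending on how the CRD schema is defined, the API server may accept it without complaint, and the intended setting then silently does not take effect. Below is a minimal Python lint sketch using the standard library's difflib to flag unknown spec keys and suggest the closest known one. KNOWN_SPEC_KEYS is a hypothetical list that only covers the keys used in this tutorial; it is not the full ChaosEngine schema.

```python
# Hypothetical lint check: flag ChaosEngine spec keys that are not in a known
# list and suggest the closest match. KNOWN_SPEC_KEYS only covers the keys used
# in this tutorial; it is NOT the full ChaosEngine schema.
import difflib
from typing import Any, Dict, List, Tuple

KNOWN_SPEC_KEYS = [
    "appinfo", "annotationCheck", "engineState", "auxiliaryAppInfo",
    "chaosServiceAccount", "monitoring", "jobCleanUpPolicy", "experiments",
]


def unknown_spec_keys(engine: Dict[str, Any]) -> List[Tuple[str, str]]:
    """Return (unknown_key, suggestion) pairs; suggestion is '' if none is close."""
    problems = []
    for key in engine.get("spec", {}):
        if key not in KNOWN_SPEC_KEYS:
            close = difflib.get_close_matches(key, KNOWN_SPEC_KEYS, n=1)
            problems.append((key, close[0] if close else ""))
    return problems


engine = {"spec": {"annotationCheck": "false", "jobCleandUpPolicy": "delete"}}
print(unknown_spec_keys(engine))  # [('jobCleandUpPolicy', 'jobCleanUpPolicy')]
```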

We have set REPLICA_COUNT to 10, so the deployment should scale up to 10 replicas. This is a very interesting experiment, as it can be used to check node autoscaling behaviour. It also shows that the people behind Litmus are very responsive to the community: this experiment came about because of a suggestion by me!

Once we apply the file, we should see the pod replicas going to 10:

```
$ kubectl get pods -n demo
NAME                          READY   STATUS              RESTARTS   AGE
nginx-f89759699-j2swb         1/1     Running             0          16m
nginx-f89759699-klwn6         1/1     Running             0          16m
nginx-autoscale-runner        1/1     Running             0          17s
pod-autoscaler-fa841p-mtqzn   1/1     Running             0          10s
nginx-f89759699-cz9n5         0/1     ContainerCreating   0          4s
nginx-f89759699-lp25g         1/1     Running             0          4s
nginx-f89759699-brtxn         1/1     Running             0          4s
nginx-f89759699-wwzjd         1/1     Running             0          4s
nginx-f89759699-8jqp9         1/1     Running             0          4s
nginx-f89759699-tp7wp         1/1     Running             0          4s
nginx-f89759699-wcqbc         1/1     Running             0          4s
nginx-f89759699-f2pph         1/1     Running             0          4s
```

As with the pod deletion experiment, we can use kubectl describe to get more detail about the results:

```
$ kubectl describe chaosresult nginx-chaos-pod-autoscaler -n demo
```

Wrapping up

Litmus is a really good tool with great community backing and a growing number of experiments. In very little time you can deploy it to a cluster and start injecting chaos to make your Kubernetes applications more resilient to failure.

All experiments are listed on ChaosHub at https://hub.litmuschaos.io/, where you can also raise issues to request new experiments and contribute your own.

Let us know on Twitter @Civocloud and @SaiyamPathak if you try Litmus on Civo!